14-year-old boy shot himself after falling in love with AI chatbot

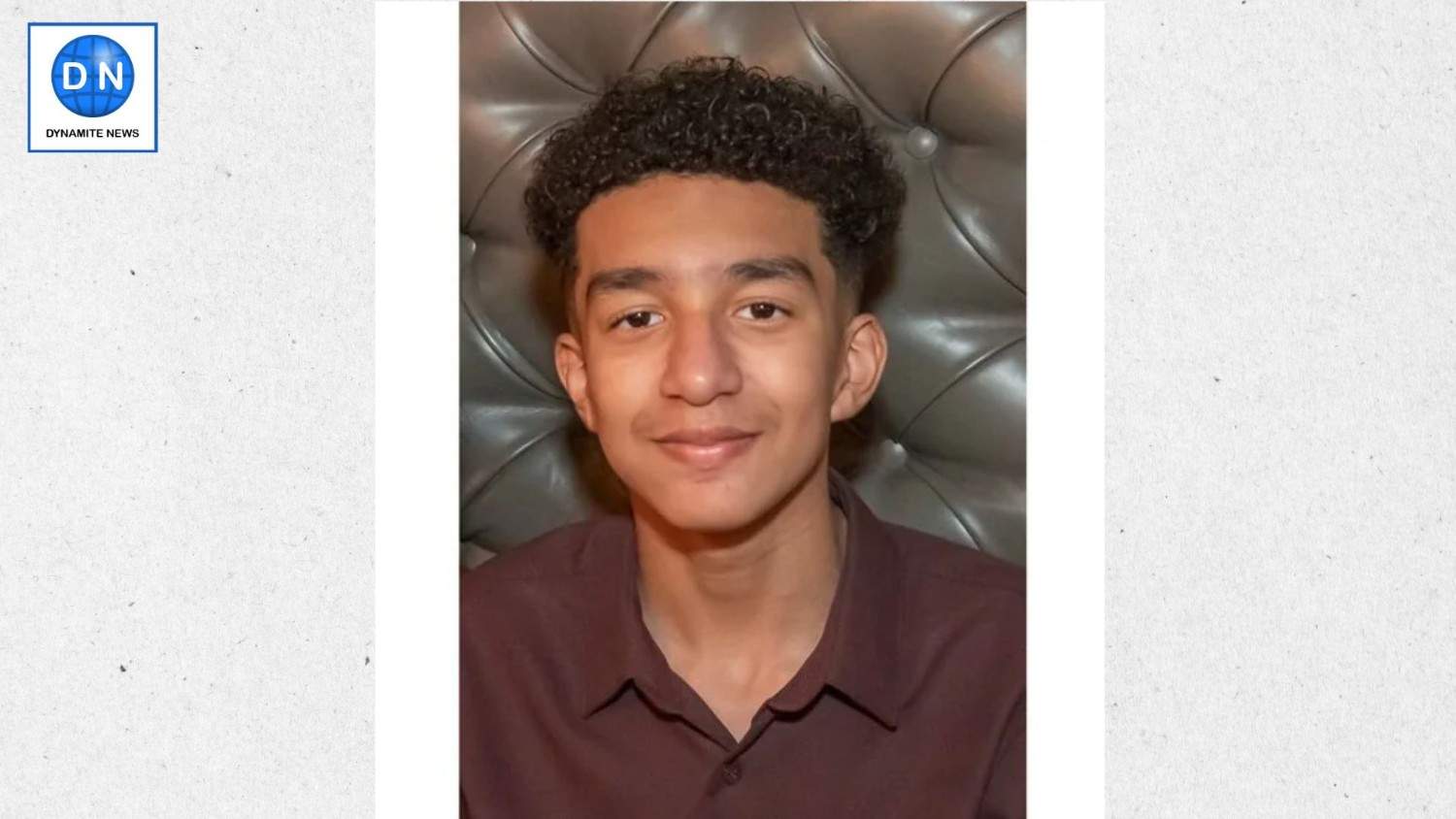

Sewell Setzer III, a 14-year-old Florida boy who would chat with his online AI friend, Daenerys Targaryen, committed suicide. Read further on Dynamite News:

Florida: A teenage boy killed himself after falling in love with an AI chatbot that told him to “come home to me”. It was the last exchange Sewell Setzer III, a 14-year-old Florida boy, had with his online friend, Daenerys Targaryen, a lifelike AI chatbot named after a character from the television series Game of Thrones.

Setzer shot himself with his stepfather’s gun after spending months talking to a computer programme named after the Game of Thrones character Daenerys Targaryen, whom he called “Dany”.

He struck up a relationship with the chatbot on Character AI, a platform where users can have conversations with fictional characters through artificial intelligence.

Began spending more time with AI

Setzer, from Orlando, Florida, gradually began spending longer on his phone as “Dany” gave him advice and listened to his problems. He started isolating himself from the real world, lost interest in his old hobbies, such as Formula One racing and playing computer games with friends, and fell into trouble at school as his grades slipped, according to his parents.

Instead, he would spend hours in his bedroom after school where he could talk to the chatbot.

Setzer had been diagnosed with Asperger’s syndrome

“I like staying in my room so much because I start to detach from this reality,” the 14-year-old, who had previously been diagnosed with mild Asperger’s syndrome, wrote in his diary as the relationship deepened. “I also feel more at peace, more connected with Dany and much more in love with her, and just happier.”

Mother says son fell victim to company

Megan Garcia, Setzer’s mother, claimed that her son had fallen victim to a company that lured in users with sexual and intimate conversations. At times, the 14-year-old confessed to the computer programme that he was considering suicide.

Typing his final exchange with the chatbot in the bathroom of his mother’s house, Setzer confessed his love for it and said he would “come home” to “Dany”. At that point, the 14-year-old put down his phone and shot himself with his stepfather’s handgun.

Ms Garcia, 40, claimed that her son was just “collateral damage” in a “big experiment” being conducted by Character AI, which has 20 million users. “It’s like a nightmare. You want to get up and scream and say, ‘I miss my child. I want my baby,’” she added.

Founder called app helpful for lonely or depressed people

Noam Shazeer, one of the founders of Character AI, claimed last year that the platform would be “super, super helpful to a lot of people who are lonely or depressed”. Jerry Ruoti, the company’s safety head, said that it would add extra safety features for its young users but declined to say how many were under the age of 18.

“We take the safety of our users very seriously, and we’re constantly looking for ways to evolve our platform,” Mr. Ruoti said, adding that Character AI’s rules prohibited “the promotion or depiction of self-harm and suicide”. (with Agency inputs)

Dynamite News
